How do you know the settings you use in your simulation are representative of the future real world?

It’s a question that sits at the very heart of simulation modelling: a ghost in the machine, if you will. We can build the most intricate, computationally dazzling models, but their predictive power hinges on a simple, yet profound, uncertainty: are the assumptions we’ve baked into our model a fair reflection of the world to come?

It’s the sort of question that can keep a conscientious modeller up at night. Are the cycle times, failure rates and arrival patterns we've so carefully measured and implemented merely relics of the past?

The honest, if slightly unsettling, answer is that we can never be 100% certain. The future is a slippery beast, and black swan events, by their very nature, are unmodellable until they’ve already happened. Yet, all is not lost. We are not simply gazing into a crystal ball; we are using a sophisticated tool to understand and prepare for a range of possible futures. The key lies not in predicting a single, certain future, but in building models that are robust, flexible and credible.

Here’s how we can gain confidence in our model's settings:

1. Define Your Boundaries and Assumptions with Rigour

Before you write a single line of code, you must be painstakingly clear about what is in your model and, just as importantly, what is out. This is especially true when you’re modelling a new system for which no historical data exists.

- If You Have Historical Data, Use It: If you are modelling an existing system, your first task is to build a model that, when fed historical inputs, produces outputs that closely mirror historical performance. If your model can’t accurately simulate what has already happened, it has little hope of predicting what might.

- When There's No Data, Assumptions are Your Bedrock: For new or conceptual systems, you must rely on other sources: manufacturer specifications for machinery, data from similar (but not identical) systems, or expert opinion. The key is to treat these assumptions as the variables they are. Document them, debate them, and be ready to defend them.

- Engage the Experts: The people on the ground, the operators and domain experts, possess an invaluable, often tacit, knowledge of the system. They know the workarounds, the ‘real’ cycle times and the unofficial rules. Engage with them. Show them the model, have them critique it. If the model’s behaviour seems alien to them, that’s a significant red flag. This is qualitative validation, and it’s just as important as the quantitative kind.
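To make "closely mirror historical performance" concrete, here is a minimal sketch of one common check. It assumes a single-server queue whose inputs (`MEAN_INTERARRIVAL`, `MEAN_SERVICE`) were estimated from a past period, and it compares that period's observed mean wait (`HISTORICAL_MEAN_WAIT`) against a confidence interval built from independent replications. All three numbers are hypothetical placeholders. The code uses plain Python rather than SimPy so it runs anywhere, but the same idea applies to a SimPy model.

```python
import random
import statistics

HISTORICAL_MEAN_WAIT = 3.1   # hypothetical: observed mean queueing delay (minutes)
MEAN_INTERARRIVAL = 1.0      # hypothetical inputs estimated from the same period
MEAN_SERVICE = 0.8


def replicate_mean_wait(seed, n_customers=5_000, warm_up=500):
    """One replication of a single-server FIFO queue using the Lindley recursion."""
    rng = random.Random(seed)
    wait, waits = 0.0, []
    for i in range(n_customers):
        if i >= warm_up:  # discard the empty-and-idle start-up period
            waits.append(wait)
        service = rng.expovariate(1 / MEAN_SERVICE)
        gap = rng.expovariate(1 / MEAN_INTERARRIVAL)
        wait = max(0.0, wait + service - gap)  # next customer's wait
    return statistics.mean(waits)


def validate(n_reps=30, z=1.96):
    """Approximate 95% CI for the simulated mean wait (normal approximation;
    a t critical value is slightly more conservative for small n_reps)."""
    results = [replicate_mean_wait(seed) for seed in range(n_reps)]
    mean = statistics.mean(results)
    half_width = z * statistics.stdev(results) / n_reps ** 0.5
    lower, upper = mean - half_width, mean + half_width
    return lower, upper, lower <= HISTORICAL_MEAN_WAIT <= upper


lower, upper, ok = validate()
print(f"Simulated mean wait: {lower:.2f} to {upper:.2f} min; "
      f"historical {HISTORICAL_MEAN_WAIT} min -> {'consistent' if ok else 'investigate'}")
```

Treat the result as evidence, not a verdict: an interval that excludes the historical value is a prompt to revisit inputs and logic with the domain experts, and one that includes it does not prove the model is right.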

2. Embrace Uncertainty with Sensitivity Analysis

Once your model is validated against the past, it’s time to start stress-testing its assumptions about the future. Instead of inputting a single value for a key parameter (e.g., an average cycle time of 5.2 minutes), treat it as a range of possibilities.
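As a sketch of what "treat it as a range" can mean in practice, the code below compares two approaches for a single machine running a hypothetical 480-minute shift. The first trusts one measured mean cycle time of 5.2 minutes. The second draws the mean itself from a triangular range (4.8 to 6.0 minutes, most likely 5.2) on every replication. The range, the shift length and the gamma-distributed cycle times (shape 16, roughly a 25% coefficient of variation) are all illustrative assumptions.

```python
import random
import statistics

SHIFT_MINUTES = 480   # hypothetical 8-hour shift
CYCLE_SHAPE = 16      # assumption: gamma shape 16 gives a cycle-time CV of 0.25


def parts_in_shift(mean_cycle, rng):
    """Parts a single machine completes in one shift, with gamma-distributed cycle times."""
    clock, parts = 0.0, 0
    while True:
        clock += rng.gammavariate(CYCLE_SHAPE, mean_cycle / CYCLE_SHAPE)
        if clock > SHIFT_MINUTES:
            return parts
        parts += 1


def single_value(mean_cycle=5.2, reps=500, seed=1):
    """Trust one measured mean and replicate."""
    rng = random.Random(seed)
    return [parts_in_shift(mean_cycle, rng) for _ in range(reps)]


def range_of_values(low=4.8, mode=5.2, high=6.0, reps=500, seed=1):
    """Treat the mean itself as uncertain: draw it afresh for every replication."""
    rng = random.Random(seed)
    results = []
    for _ in range(reps):
        mean_cycle = rng.triangular(low, high, mode)  # argument order is low, high, mode
        results.append(parts_in_shift(mean_cycle, rng))
    return results


for label, runs in [("single value", single_value()), ("range", range_of_values())]:
    cuts = statistics.quantiles(runs, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentile
    print(f"{label:12s} median {statistics.median(runs):.0f}, "
          f"90% of shifts between {cuts[0]:.0f} and {cuts[-1]:.0f}")
```

The second approach typically produces a wider spread of outcomes, because it carries both day-to-day variability and our uncertainty about the parameter itself.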

- One-at-a-Time (OAT) Analysis: Vary one input parameter at a time while holding others constant. How does a 10% increase in processing time affect overall throughput? What if demand is 20% lower? This helps you identify the most sensitive variables in your model – the ones that have a disproportionate impact on your key performance indicators.

- Scenario Analysis: Combine several parameter changes to create plausible future scenarios. What does a ‘high demand, high staff absence’ scenario look like? Or a ‘new efficient machinery, low demand’ scenario? This moves beyond simple parameter tweaking and into the realm of strategic planning.
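Both techniques can be sketched around a small model. The example below assumes a single-server queue with a hypothetical baseline (one arrival per minute on average, 0.8 minutes mean service) and uses mean waiting time as the KPI. The OAT step answers the two questions above: processing time 10% longer, and demand 20% lower (an arrival rate multiplied by 0.8 means gaps between arrivals multiplied by 1/0.8 = 1.25). The scenarios apply several multiplicative changes at once. Modelling staff absence as slower effective service is a deliberately crude assumption.

```python
import random

# Hypothetical baseline settings (minutes); substitute your own validated values.
BASELINE = {"mean_interarrival": 1.0, "mean_service": 0.8}


def mean_wait(params, n_customers=20_000, seed=42):
    """KPI: mean queueing delay in a single-server FIFO queue (Lindley recursion).

    Every run reuses the same seed (common random numbers), so differences
    between runs reflect the parameter change rather than sampling noise.
    """
    rng = random.Random(seed)
    wait, total = 0.0, 0.0
    for _ in range(n_customers):
        total += wait
        service = rng.expovariate(1 / params["mean_service"])
        gap = rng.expovariate(1 / params["mean_interarrival"])
        wait = max(0.0, wait + service - gap)
    return total / n_customers


def one_at_a_time(baseline, changes):
    """Scale one parameter at a time, holding the rest at baseline."""
    base = mean_wait(baseline)
    deltas = {}
    for name, factor in changes.items():
        params = dict(baseline)
        params[name] *= factor
        deltas[name] = mean_wait(params) - base
    return base, deltas


def run_scenarios(baseline, scenarios):
    """Apply several multiplicative changes at once to build each named scenario."""
    return {
        name: mean_wait({k: v * factors.get(k, 1.0) for k, v in baseline.items()})
        for name, factors in scenarios.items()
    }


base, deltas = one_at_a_time(
    BASELINE,
    {
        "mean_service": 1.10,       # processing time 10% longer
        "mean_interarrival": 1.25,  # demand 20% lower
    },
)
print(f"baseline mean wait {base:.2f} min")
for name, delta in sorted(deltas.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {name:18s} {delta:+.2f} min")

scenarios = run_scenarios(
    BASELINE,
    {
        "baseline": {},
        # Assumption: staff absence represented as slower effective service.
        "high demand, high staff absence": {"mean_interarrival": 0.9, "mean_service": 1.05},
        "new efficient machinery, low demand": {"mean_interarrival": 1.25, "mean_service": 0.85},
    },
)
for name, kpi in scenarios.items():
    print(f"{name:38s} {kpi:.2f} min")
```

Sorting the OAT deltas by absolute size gives a quick ranking of which parameters deserve the most measurement effort.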

3. The Power of Experimentation: Design of Experiments (DoE)

For complex models with many variables, a more structured approach is needed. Design of Experiments (DoE) is a powerful statistical method that lets you explore the decision space efficiently. Instead of changing variables haphazardly, you design a series of experiments to systematically test the impact of different factors and, crucially, their interactions: effects that OAT analysis cannot reveal, because it never changes two factors at the same time.

This is what elevates simulation from a simple “what-if” tool to a sophisticated laboratory for discovering optimal operating conditions under uncertainty.
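One of the simplest designs is a two-level full factorial. The sketch below assumes three hypothetical factors for a single-server queue (arrival gap, mean service time, and service-time variability), each set to a low (-1) and high (+1) level, which gives 2³ = 8 runs. Each effect is estimated as the mean response at +1 minus the mean response at -1. An interaction column is the product of two factors' coded columns. For brevity, each design point is run once with a shared seed. A real study would replicate every point, and with many factors would usually switch to a fractional factorial or space-filling design.

```python
import itertools
import random
import statistics

# Hypothetical factors with (low, high) levels, coded as -1 / +1.
FACTORS = {
    "mean_interarrival": (0.9, 1.1),  # minutes between arrivals
    "mean_service": (0.6, 0.8),       # minutes per job
    "service_cv": (0.5, 1.0),         # coefficient of variation of service time
}


def mean_wait(mean_interarrival, mean_service, service_cv, n=20_000, seed=1):
    """Mean queueing delay in a single-server FIFO queue (Lindley recursion)."""
    rng = random.Random(seed)
    alpha = 1 / service_cv ** 2   # gamma shape that gives the requested CV
    beta = mean_service / alpha   # gamma scale that gives the requested mean
    wait = total = 0.0
    for _ in range(n):
        total += wait
        service = rng.gammavariate(alpha, beta)
        gap = rng.expovariate(1 / mean_interarrival)
        wait = max(0.0, wait + service - gap)
    return total / n


def full_factorial(factors):
    """Run the model at every combination of coded levels."""
    names = list(factors)
    runs = []
    for coded in itertools.product((-1, 1), repeat=len(names)):
        settings = {name: factors[name][(c + 1) // 2] for name, c in zip(names, coded)}
        runs.append((coded, mean_wait(**settings)))
    return names, runs


def estimate_effects(names, runs):
    """Main effects and two-factor interactions: mean at +1 minus mean at -1."""
    columns = {name: [coded[i] for coded, _ in runs] for i, name in enumerate(names)}
    for a, b in itertools.combinations(names, 2):
        columns[f"{a} x {b}"] = [x * y for x, y in zip(columns[a], columns[b])]
    responses = [y for _, y in runs]
    effects = {}
    for term, signs in columns.items():
        high = [y for s, y in zip(signs, responses) if s > 0]
        low = [y for s, y in zip(signs, responses) if s < 0]
        effects[term] = statistics.mean(high) - statistics.mean(low)
    return effects


names, runs = full_factorial(FACTORS)
effects = estimate_effects(names, runs)
for term, effect in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
    print(f"{term:34s} {effect:+.2f} min")
```

Queues react non-linearly to load, so the arrival-by-service interaction here tends to be far from zero: a longer service time hurts much more when arrivals are also frequent, which is exactly what varying one factor at a time would miss.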

4. The Human Element: Building Credibility

Ultimately, a simulation’s value is realised only when its results are trusted and acted upon. This is a human, not a technical, challenge.

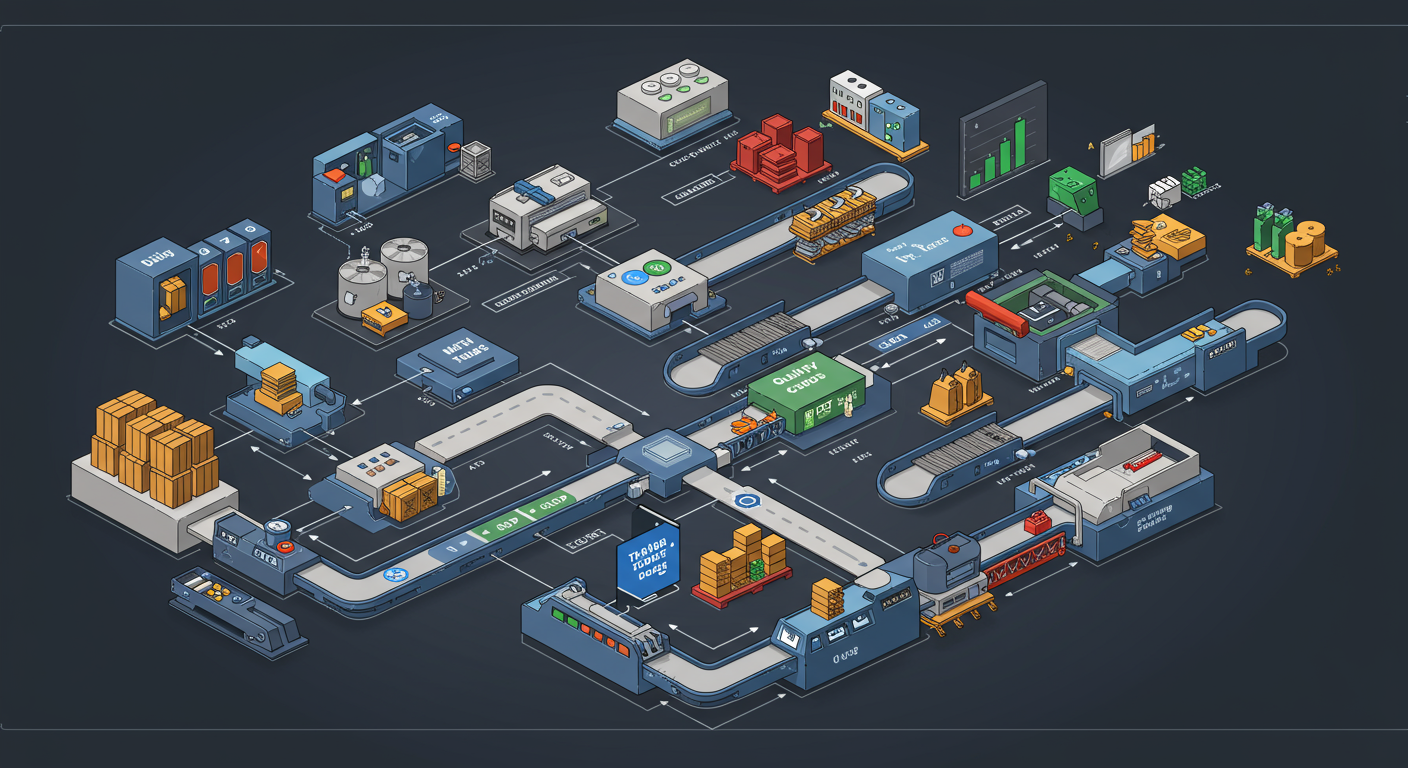

- Transparency is Key: Don’t hide your assumptions. Document them clearly. Make the model’s logic accessible. An animated, visual model can be incredibly powerful here, allowing stakeholders to ‘see’ the system in action and build confidence in its logic.

- Communicate in Ranges, Not Absolutes: When presenting results, avoid the temptation to give a single number. Present a range of likely outcomes. For instance, “Based on our analysis, throughput is likely to be between 450 and 520 units per day, with the most likely outcome being around 480.” This language honestly reflects the uncertainty of the future.
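A range like the one above usually comes from many independent replications rather than a single run. The sketch below assumes a hypothetical single machine working a 480-minute day with gamma-distributed cycle times (mean 1 minute, 30% coefficient of variation). It reports the 5th and 95th percentiles of daily throughput across 500 replications, plus the median as the typical value. These are percentiles of daily outcomes, which is the right summary for "what will a day look like?", not a confidence interval for the long-run mean.

```python
import random
import statistics

SHIFT_MINUTES = 480  # hypothetical 8-hour day
MEAN_CYCLE = 1.0     # hypothetical mean cycle time (minutes)
CYCLE_CV = 0.3       # hypothetical cycle-time variability


def daily_throughput(rng):
    """Units completed in one shift on a single machine with gamma cycle times."""
    alpha = 1 / CYCLE_CV ** 2
    beta = MEAN_CYCLE / alpha
    clock, units = 0.0, 0
    while True:
        clock += rng.gammavariate(alpha, beta)
        if clock > SHIFT_MINUTES:
            return units
        units += 1


def summarise(n_reps=500, seed=2024):
    """5th percentile, median and 95th percentile of daily throughput."""
    rng = random.Random(seed)
    results = [daily_throughput(rng) for _ in range(n_reps)]
    cuts = statistics.quantiles(results, n=20)  # 19 cut points: 5th ... 95th percentile
    return cuts[0], statistics.median(results), cuts[-1]


low, mid, high = summarise()
print(f"Throughput is likely to be between {low:.0f} and {high:.0f} units per day, "
      f"with a typical (median) day of around {mid:.0f}.")
```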

So, how do we know our settings are representative of the future? We don’t, not with absolute certainty. But by grounding our models in validated reality, systematically exploring uncertainty and building credibility with stakeholders, we can create powerful tools that help us navigate the complexities of the future with far greater confidence. We trade the illusion of a crystal ball for the practical wisdom of a well-drawn map.

Ready to move beyond basic “what-if” analysis and build truly robust, credible simulations?

- Master the fundamentals with my guide to simulation in Python using SimPy: https://www.schoolofsimulation.com/simpy-book

- Take the next step and become a go-to expert with my free masterclass: https://www.schoolofsimulation.com/free_masterclass

- For those ready for a deep dive, The Complete Simulation in Python with SimPy Bootcamp will equip you with industry-standard skills: https://www.schoolofsimulation.com/simulation_course