How Good is Gemini CLI vs Claude Code at Writing Simulations in Python with SimPy?

If you were to believe the chatter on the internet, you'd think that Claude Code, particularly the mighty Opus model, is the undisputed champion of AI-assisted coding. I've been putting both Claude and Google's Gemini CLI through their paces recently, and I must say, I'm not entirely convinced by the popular narrative.

Claude Opus is, without a doubt, an immense reasoning engine. It thinks, it iterates, it refines, and it thinks some more. There's a certain intellectual weight to its process that is undeniably impressive. However, this same quality can be its Achilles' heel. If you're not careful, Claude can lead you down some rather elaborate rabbit holes, constructing complex solutions that are a nightmare to navigate out of. It's a bit like hiring a verbose genius who occasionally gets lost in their own brilliance.

Gemini CLI, on the other hand, feels a little different. It might seem a touch more "unreliable" at first glance, but what it offers is conciseness. It gets the job done without the fuss, and frankly, I appreciate that. There's an elegance in its simplicity.

I suspect Gemini might be subtly smarter when dealing with data-centric problems. In a recent piece of client work involving the animation of simulation data, Claude immediately lunged at a solution that worked directly from the several disparate datasets I had. Gemini, however, took a commendable step back. It suggested that we first consolidate the data into a single, coherent dataset and *then* proceed with the animation. Claude would have skipped that simple, logical step entirely and merrily burrowed down its rabbit hole, leaving me to clean up the mess. It was a moment of clarity that saved me a world of pain.
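To make the "consolidate first" idea concrete, here is a minimal sketch in plain Python. It is not the client code: the three event logs, the field names (`entity`, `time`) and the `consolidate` helper are all hypothetical, invented purely for illustration. The point is only that merging the separate logs into one time-ordered list gives an animation loop a single thing to iterate over.

```python
# Hypothetical per-source logs; in real work these might come from separate files.
arrivals = [{"entity": "job-1", "time": 0.0}, {"entity": "job-2", "time": 1.5}]
service_starts = [{"entity": "job-1", "time": 0.5}, {"entity": "job-2", "time": 2.0}]
departures = [{"entity": "job-1", "time": 1.8}, {"entity": "job-2", "time": 3.1}]


def consolidate(sources):
    """Merge several event logs into one list of events sorted by time.

    `sources` maps an event-type label to a list of records, each with
    "entity" and "time" keys (assumed field names).
    """
    events = []
    for event_type, records in sources.items():
        for record in records:
            events.append(
                {
                    "time": record["time"],
                    "entity": record["entity"],
                    "event": event_type,
                }
            )
    # One time-ordered stream is what an animation loop would step through.
    events.sort(key=lambda e: e["time"])
    return events


timeline = consolidate(
    {
        "arrival": arrivals,
        "service_start": service_starts,
        "departure": departures,
    }
)

for e in timeline:
    print(f'{e["time"]:>5.1f}  {e["entity"]}  {e["event"]}')
```

With one consolidated timeline, each animation frame only has to ask "what happened up to time t?" of a single structure, rather than cross-referencing several.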

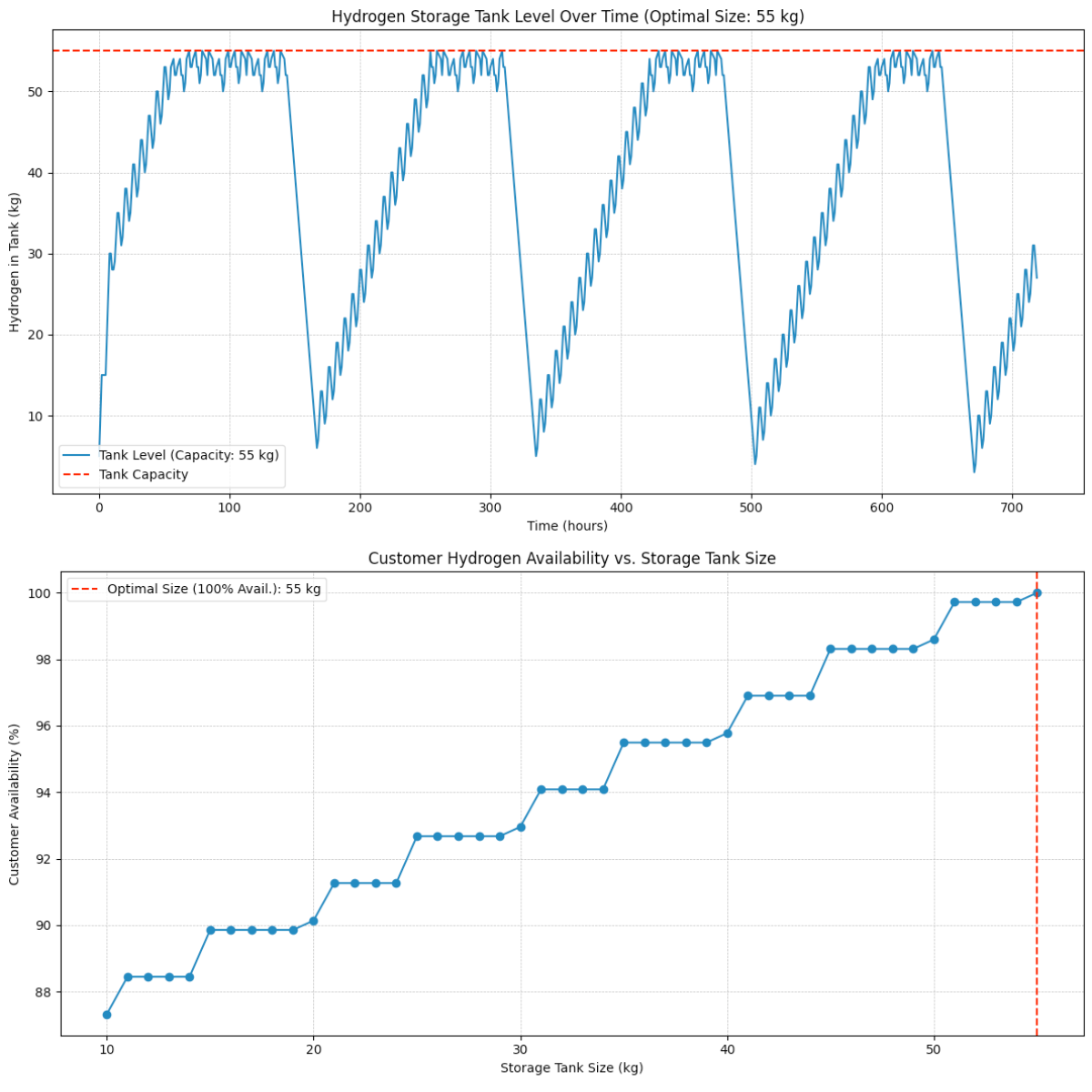

This brings me to the main event: the SimPy simulation benchmark test. I've just run it with Gemini CLI, and the results are, to put it mildly, startling. It nailed the problem, achieving a result closer to the mark than Claude Opus did. Not only that, but it did so while running more scenarios and using significantly fewer lines of code. The full breakdown of the results is in my benchmarking spreadsheet.

What's particularly interesting is the continuity. While the code was structured differently from the solution I got from Gemini 2.5 Pro via the chatbot back in April, the first of our visualisations looked exactly the same. This is encouraging; it shows a consistent underlying capability. Yet, the CLI seems to work harder. It gave me a second chart, ran a broader range of scenarios, and, as I mentioned, did it all with a leaner script. The Gemini CLI team often talks about their aim for "extreme conciseness" in the default system prompt, and it truly shows. The response was robust, efficient, and deeply impressive.

So, bravo to the Gemini CLI team and the open-source community that supports it. For the specific, and often complex, task of writing SimPy simulations, I am officially declaring Gemini CLI to be in the lead.
